Projects

The AI Objectives Institute’s goal is to enhance collective agency by building tools critical to the differential development of beneficial technology and by developing paradigms and strategies that help others do the same.

Our projects span both theoretical research and applied work: our tools support reflection and coherent intent at individual, collective, and institutional scales, while our research explores concrete visions for the beneficial use of technology.

Featured Projects

Talk to the City is an LLM survey tool that improves collective discourse and decision-making by analyzing detailed qualitative responses to a question rather than limited quantitative data. It enables automatic analysis of these qualitative responses at a scale and speed not previously possible, helping policymakers discover unknown unknowns and identify specific cruxes of disagreement. We are incubating the tool to responsibly serve a variety of use cases, from democratic and union decision-making to understanding the needs of aid recipients in refugee camps and of conflicting groups in peace mediation settings.

The AI Supply Chain Observatory (AISCO) project works to improve our shared ability to respond to supply chain disruptions by identifying early-warning indicators and root causes of harmful and catastrophic disruptions. AISCO also offers a platform for practitioners to share and publish practices that strengthen supply chains and improve resilience. The Observatory’s focus includes systemic risks associated with panic buying, bullwhip effects, event-driven supply shocks, accelerated market volatility, AI-facilitated price arbitrage, and Black Swan events.

AI, Markets, and Economic Impacts

Our ongoing theoretical research on the intersection of AI and market economies seeks to characterize, forecast, and mitigate the downside risks of increasing automation of production.

A recent paper, AI Governance through Markets (arXiv), argues that market governance mechanisms should be considered a key approach in the governance of artificial intelligence (AI), alongside traditional regulatory frameworks.

Our ongoing Machina Economica series explores analogues in technological disruption and the commodification of its risks. Read Part I and Part II; Part III, on diffusion dynamics, is forthcoming.

Transformative Simulations Research (TSR) uses wargaming and advanced simulations to model how human individuals, groups, and societies may respond to the development of transformative artificial intelligence (TAI) capabilities.

By rigorously simulating potential TAI scenarios from multiple perspectives, TSR aims to give policymakers and technologists the foresight needed to steer advanced AI toward robustly beneficial outcomes that preserve societal cohesion and resilience.

Aligning technology to human objectives requires understanding those objectives, and the virtues and values behind them. Through this work, we aim to answer two questions: what it means for an AI to represent moral virtues and values, and what components are required to build such systems. Our goals are both to make the values represented in LLMs transparent and to build tools that apply cutting-edge computational approaches to social reasoning.

At a high level, we have identified three main components required for this work:

A separable representation of value weights and semantic weights, implementing semantics on an LLM basis and representing value quantitatively in a reinforcement-learning system that interacts with the LLM;

An LLM-based semantic representation tuned to capture moral semantics as validated by psychological research;

A moral reasoning component implemented as a probabilistic generative model, learned by using an LLM to interpret individual natural-language utterances, but performing explicit relational learning and reasoning within an interpretable, robust framework.

We adopt a method similar to the rational meaning construction method described in Wong et al. (2023) to build generative models from natural-language statements using an LLM-based translator.
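A toy sketch of the general idea behind rational meaning construction: natural-language statements are translated into conditions and queries on a probabilistic generative model, and probabilistic inference then answers the queries. Everything here is hypothetical and for illustration only: the world model, its probabilities, and the `TRANSLATIONS` table that stands in for the LLM-based translator. It also uses plain Python with rejection sampling in place of a probabilistic programming language, so it is not the implementation used in this project.

```python
import random


# Hypothetical toy world model (priors and likelihoods are made up for illustration):
# a person has a hidden trait, and the trait influences an observable action.
def sample_world(rng: random.Random) -> dict:
    kind = rng.random() < 0.3            # prior: P(kind) = 0.3
    p_help = 0.8 if kind else 0.2        # P(helps a stranger | kind), P(... | not kind)
    helped = rng.random() < p_help
    return {"kind": kind, "helped_stranger": helped}


# Stand-in for the LLM-based translator. In rational meaning construction an LLM
# maps each natural-language sentence to a condition or query in a probabilistic
# program; here that mapping is a hard-coded, hypothetical lookup table.
TRANSLATIONS = {
    "Alex helped a stranger.": lambda w: w["helped_stranger"],
    "Is Alex kind?": lambda w: w["kind"],
}


def infer(conditions: list, query: str, n: int = 100_000, seed: int = 0) -> float:
    """Estimate P(query | conditions) by rejection sampling from the world model."""
    rng = random.Random(seed)
    kept = hits = 0
    for _ in range(n):
        world = sample_world(rng)
        # Keep only sampled worlds consistent with every translated statement.
        if all(TRANSLATIONS[c](world) for c in conditions):
            kept += 1
            hits += TRANSLATIONS[query](world)
    if kept == 0:
        raise ValueError("no sampled world satisfied the conditions")
    return hits / kept


if __name__ == "__main__":
    print(infer([], "Is Alex kind?"))                           # estimate of the prior P(kind)
    print(infer(["Alex helped a stranger."], "Is Alex kind?"))  # estimate of P(kind | helped)
```

For the second query the exact posterior is 0.3·0.8 / (0.3·0.8 + 0.7·0.2) ≈ 0.63, which the sampler approximates. Keeping the translator separate from the generative model mirrors the division of labor described above: the LLM handles language, while the explicit model carries the reasoning.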

Early work on this project was presented at NeurIPS 2023 as Leshinskaya and Chakroff (2023), Value as Semantics: Representations of Human Moral and Hedonic Value in Large Language Models.

Other Projects

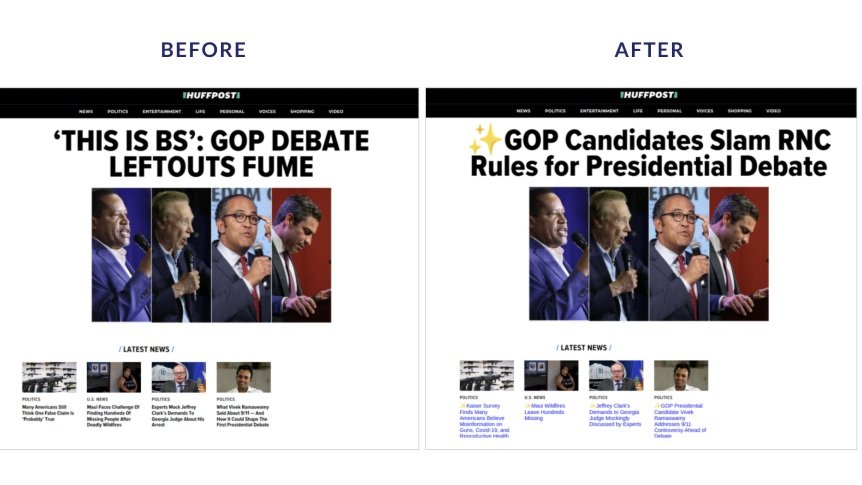

Lucid Lens

Lucid Lens aims to help individuals navigating an ever-evolving technological landscape maintain their autonomy and see through the distortions around them. It helps people understand the broader context and orient more coherently toward the systems and information around them. Once people understand which motives are at play or which buttons are being pushed, they can respond accordingly. Recognizing when an agent, article, or system has an underlying goal that isn’t transparent is a first step toward creating better agents.

Read the overview on our blog and try the Chrome plugin for yourself!

Research Agenda for Sociotechnical Approaches to AI Safety

As the capabilities of AI systems continue to advance, it is increasingly important that we guide the development of these powerful technologies, ensuring they are used for the benefit of society.

Existing work analyzing and assessing risks from AI spans a broad and diverse range of perspectives, some of which diverge enough in motivation and approach that they disagree on priorities and desired solutions. Yet we find significant overlap in these perspectives’ desire for beneficial outcomes from AI deployment, and we see significant potential for progress toward such outcomes in examining that overlap. In this research agenda, we explore one such area of overlap: areas of AI safety work that could benefit from sociotechnical framings of AI. These framings view AI systems as embedded in larger sociotechnical systems, and they treat the potential risks and benefits of AI not just as properties of these new tools but as possibilities arising from the complex interactions between humans and our technologies.

Markets as Learning Optimizers

Comparative study of optimization in AI and social systems, such as markets and corporations, can be a rich source of insight into the dynamics of each. Many optimization and alignment questions in one domain have counterparts in the other: markets clear through a process akin to gradient descent, and Goodhart's Law is equivalent to model overfitting.
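To make the gradient-descent analogy concrete, here is a minimal sketch of Walrasian tâtonnement for a single good, assuming linear demand and supply curves with made-up coefficients (the names and values are illustrative, not taken from AOI's work). The price moves in the direction of excess demand, which is exactly a gradient-descent step on the quadratic potential V(p) = ½(B + D)(p − P*)², whose minimum is the market-clearing price P*.

```python
# Hypothetical single-good market with linear curves (illustrative coefficients):
#   quantity demanded q_d(p) = A - B*p,  quantity supplied q_s(p) = C + D*p
A, B, C, D = 10.0, 1.0, 2.0, 1.0
P_STAR = (A - C) / (B + D)  # market-clearing price where q_d(p) == q_s(p)


def excess_demand(p: float) -> float:
    """Quantity demanded minus quantity supplied at price p."""
    return (A - B * p) - (C + D * p)


def potential_gradient(p: float) -> float:
    """Derivative of V(p) = 0.5*(B + D)*(p - P_STAR)**2; equals -excess_demand(p)."""
    return (B + D) * (p - P_STAR)


def tatonnement(p0: float = 1.0, eta: float = 0.1,
                max_steps: int = 1000, tol: float = 1e-9) -> float:
    """Raise the price when demand exceeds supply and lower it otherwise.

    The update p += eta * excess_demand(p) is identical to the
    gradient-descent step p -= eta * potential_gradient(p).
    """
    p = p0
    for _ in range(max_steps):
        z = excess_demand(p)
        if abs(z) < tol:  # market (approximately) clears
            break
        p += eta * z
    return p


if __name__ == "__main__":
    print(P_STAR, tatonnement())  # the iterate converges toward P_STAR
```

Here the step size eta plays the role of a learning rate: each update shrinks the distance to P_STAR by a factor of 1 − eta·(B + D), so for these coefficients any eta larger than 2/(B + D) = 1 makes prices overshoot and diverge, just as an oversized learning rate does in gradient descent.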

Anders Sandberg (FHI, AOI) and Aanjaneya Kumar (PhD IISER Pune, AOI Fellow) are developing a preliminary mathematical result showing that, under reasonable assumptions, market economies are structurally very similar to artificial neural networks (see this section of our whitepaper). We expect this work to identify abstractions that hold for optimizers across different contexts and scales, and to suggest alignment strategies that generalize across them. The work also extends the late Peter Eckersley's research on the subject.