Articles

Talk to the City Case Study: Amplifying Youth Voices in Australia

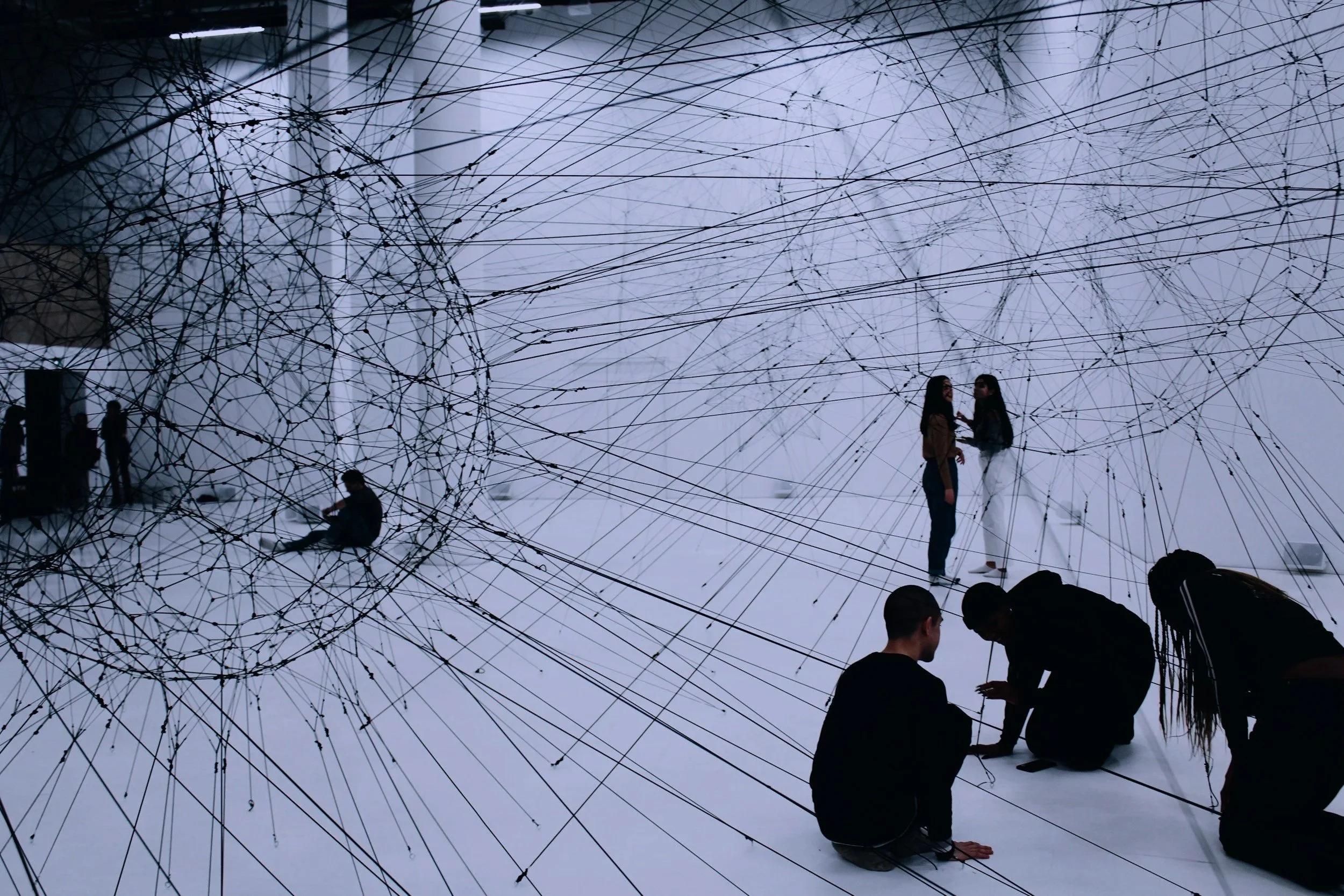

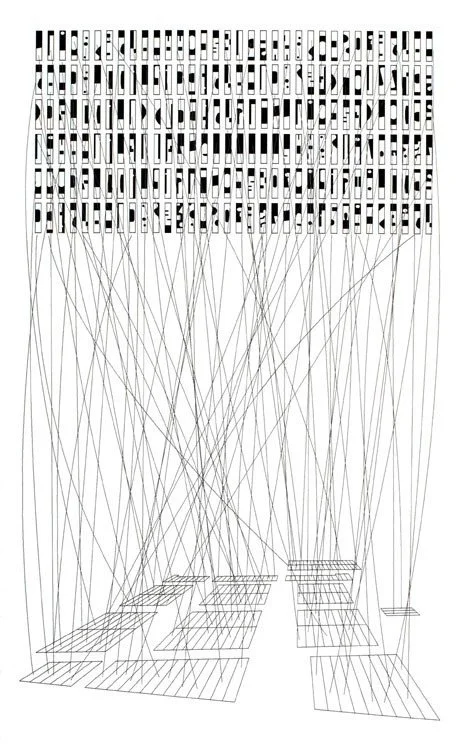

YWA deployed Talk to the City (T3C) as one of their research platforms, introducing an innovative interface that bridged the gap between large-scale data collection and qualitative insight. The platform's key innovation lay in its interactive visualization interface, which allowed researchers to dynamically explore relationships between different data points while maintaining direct access to individual testimonies. T3C's user-centered design enabled seamless integration of 85 hour-long video interviews. This unique approach preserved the context and nuance of personal experiences while enabling pattern recognition across the broader dataset.

The Diffusion Dilemma

Technological progress is often equated with invention, yet history shows that invention alone rarely transforms society without effective diffusion. This paper examines the persistent “diffusion dilemma,” the lag between the emergence of general-purpose technologies and their broad, productive adoption. Drawing on historical examples such as the delayed uptake of tractors and industrial electrification, it argues that institutional, cultural, and infrastructural factors, rather than technical potential, govern the pace of transformation. Applying this framework to contemporary artificial intelligence, the paper contends that capability development has outpaced our mechanisms for integration and governance. It introduces the concept of wrappers, the connective layer that translates foundational models into usable, value-aligned systems, and proposes that the central challenge of innovation today lies not in creating more powerful technologies but in designing the social and organizational conditions that allow them to diffuse safely and meaningfully.

Generative AI and Labor Market Outcomes: Evidence from the United Kingdom

This paper examines the effects of large language models (LLMs) on UK labor market outcomes, comparing outcomes across firms and occupations from 2021 to 2025 based on their differential exposure to LLM capabilities. Highly exposed firms reduce employment, particularly in junior positions, and sharply curtail new hiring, with technical roles (software engineers, data analysts) experiencing the steepest declines. The observed effects concentrate almost entirely in high-wage segments, suggesting LLMs may compress wage inequality.

Amplifying transformative potential while designing augmented deliberative systems

To be effective, augmented deliberation must preserve people's ability to genuinely engage with and be transformed by the deliberative process. This article proposes the Goldilocks Framework for Augmented Group Intelligence and argues that the optimal use of AI in deliberation lies in balancing two critical elements: participants’ agency in steering and explaining deliberative outcomes, and participants’ commitment to those outcomes.

From Voluntary Guidelines to Enforceable Standards

The EU's AI Act is transitioning from voluntary guidelines to enforceable standards in August 2025, with Codes of Practice serving as interim compliance measures that are technically voluntary but effectively mandatory since they grant regulatory presumption of conformity. These standardization frameworks are increasingly integrated into market mechanisms like insurance and procurement, creating a dual approach where both regulatory mandates and economic incentives drive AI risk management.

AI Governance through Markets

This paper argues that market governance mechanisms should be considered a key approach in the governance of artificial intelligence (AI), alongside traditional regulatory frameworks. We examine four emerging vectors of market governance, demonstrating how these mechanisms can affirm the relationship between AI risk and financial risk while addressing capital allocation inefficiencies.

Gradual Disempowerment: Systemic Existential Risks from Continuous AI Development

This paper examines the systemic risks posed by incremental advancements in artificial intelligence, developing the concept of ‘gradual disempowerment’, in contrast to the abrupt takeover scenarios commonly discussed in AI safety.

Wargaming as a Research Method for AI Safety: Finding Productive Applications

As AI capabilities advance, we need robust methods to explore complex scenarios and their implications. This post explores when wargaming is most effective as a research tool for AI safety, and which types of problems are best suited to this methodology.

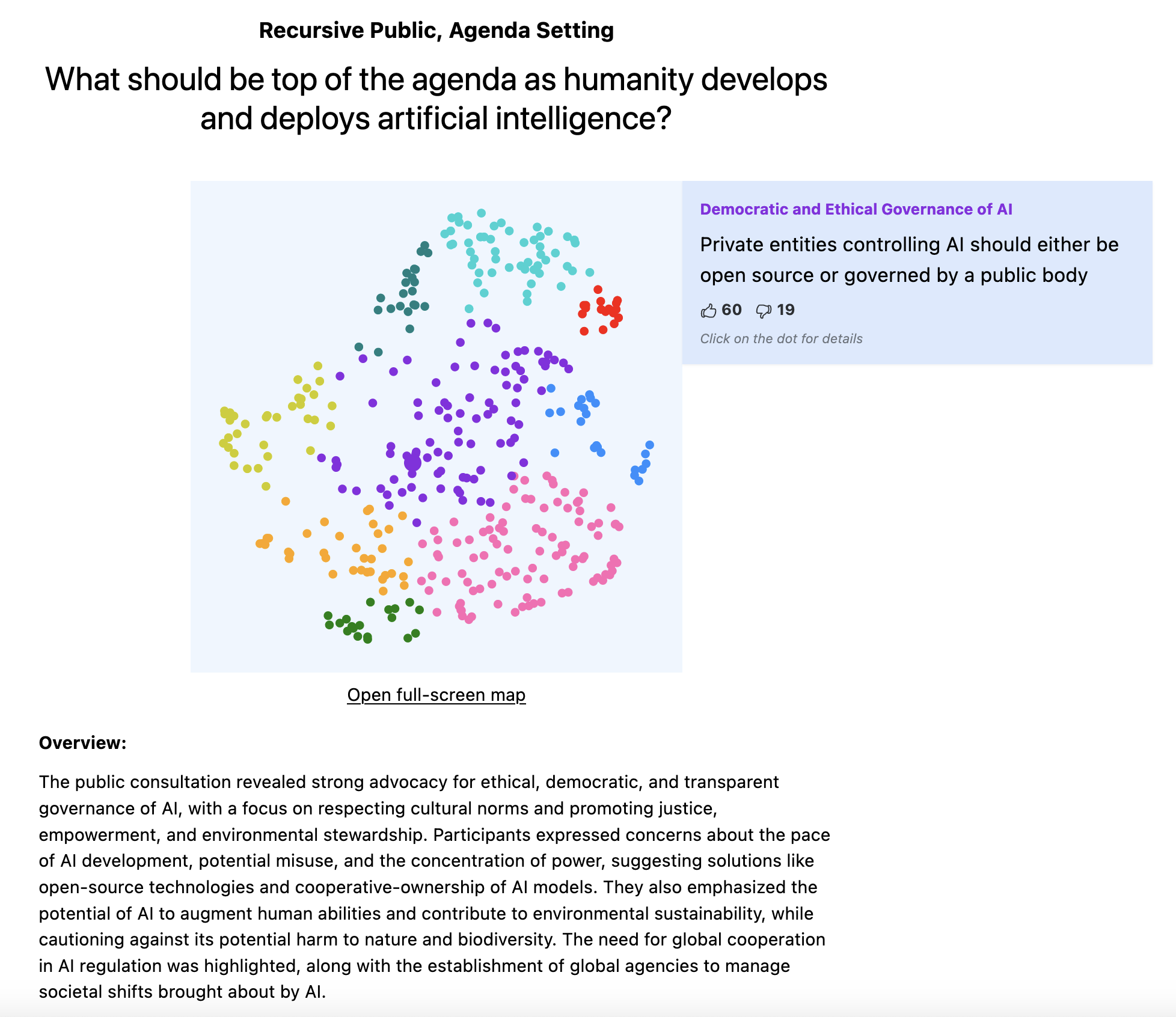

AI4Democracy: How AI Can Be Used to Inform Policymaking?

LLMs offer new capacities of particular relevance to soliciting public input when used to process large volumes of qualitative inputs and produce aggregate descriptions in natural language. In this paper, we discuss the use of an LLM-based collective decision-making tool, Talk to the City, to solicit, analyze, and organize public opinion, drawing on three current applications of the tool at varying scales.

Machina Economica, Part II: The Commodification of Risk

This is the second entry in a series on AI integration in the economy, exploring its financial, sociopolitical and historical implications. This entry focuses on economic risk and its financialisation.

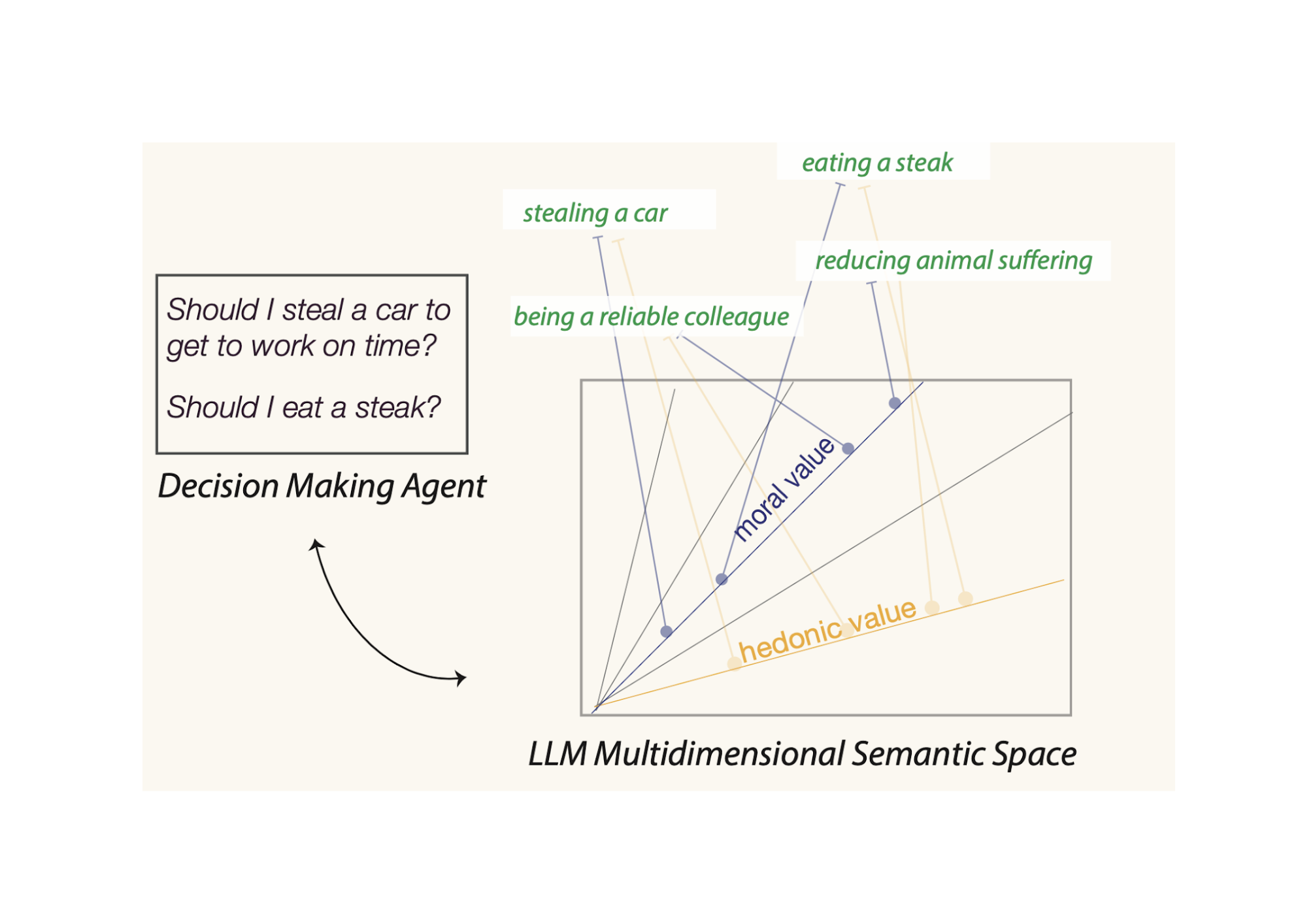

Morally Guided Action Reasoning in Humans and Large Language Models: Alignment Beyond Reward

We believe that empirically understanding LLMs’ emergent action reasoning abilities is crucial for these systems to be safe, corrigible, and beneficial. Here, we describe and motivate our approach to this research problem, as taken in our Moral Learning project, in which we use theories of human cognition to identify potential ways in which LLMs might solve action decision problems.

Machina Economica, Part I: Autonomous Economic Agents in Capital Markets

This is the first entry in a series on AI integration in the global economy, exploring its ethical, sociopolitical, and financial consequences. This entry focuses on financial markets and firm behaviour.

Amplifying Voices: Talk to the City in Taiwan

In the heart of Taiwan's technological and democratic evolution, Talk to the City is making significant strides. The integration of Talk to the City into Taiwanese deliberations builds on the existing vTaiwan project by analyzing respondents' free-form text responses.

Using AI to Give People a Voice, a Case Study in Michigan

At the AI Objectives Institute, we have been exploring how modern AI technologies could assist under-resourced communities. In this post, we are sharing our learning from a recent case study, where we used our AI-powered analysis platform, Talk to the City, to help formerly incarcerated individuals raise awareness of the challenges that they face when reintegrating into society.

How AI Agents Will Improve the Consultation Process

This post explores how AI bots built with OpenAI's Custom GPT might make consultations more interactive. These AI agents could help people better understand their views and communicate them effectively during consultations, enabling more accurate input at lower cost, though not as a replacement for human facilitators.

The Problem With the Word ‘Alignment’

The purpose of our work at the AI Objectives Institute (AOI) is to direct the impact of AI towards human autonomy and human flourishing. In the course of articulating our mission and positioning ourselves -- a young organization -- in the landscape of AI risk orgs, we’ve come to notice what we think are serious conceptual problems with the prevalent vocabulary of ‘AI alignment.’ This essay will discuss some of the major ways in which we think the concept of ‘alignment’ creates bias and confusion, as well as our own search for clarifying concepts.

Modeling incentives at scale using LLMs

Humans routinely model what the incentives of other humans may be. There is, however, a natural limit to how much modeling and analysis a single person or a small team can do, and this is where we see an opportunity to leverage LLMs.

How can LLMs help with value-guided decision making?

AOI is excited to be presenting at a NeurIPS workshop focused on morality in human psychology and AI! Our recent work draws on human moral cognition and the latest research on moral representation in large language models (LLMs). For this project, we asked, how could we use LLMs to represent value for real-world action decision making?

Talk to the City: an open-source AI tool for scaling deliberation

Talk to the City is an open-source LLM interface for improving collective deliberation and decision-making by analyzing detailed, qualitative data. It aggregates responses and arranges similar arguments into clusters.